Many people get frustrated by the fact that miracle pills, diets and remedies are announced by scientists with regularity, yet in most cases subsequent research can’t replicate the original “breakthrough”. A recent meta-analysis of research studies on Omega-3 supplements (more commonly known as “fish oil” pills) is a case in point.

Early research seemed to indicate that fish oil pills dramatically lowered risk for heart attacks and strokes, not only in those people who had already suffered one cardiovascular event but in the rest of the population as well. A grateful nation bought the pills by the boatload.

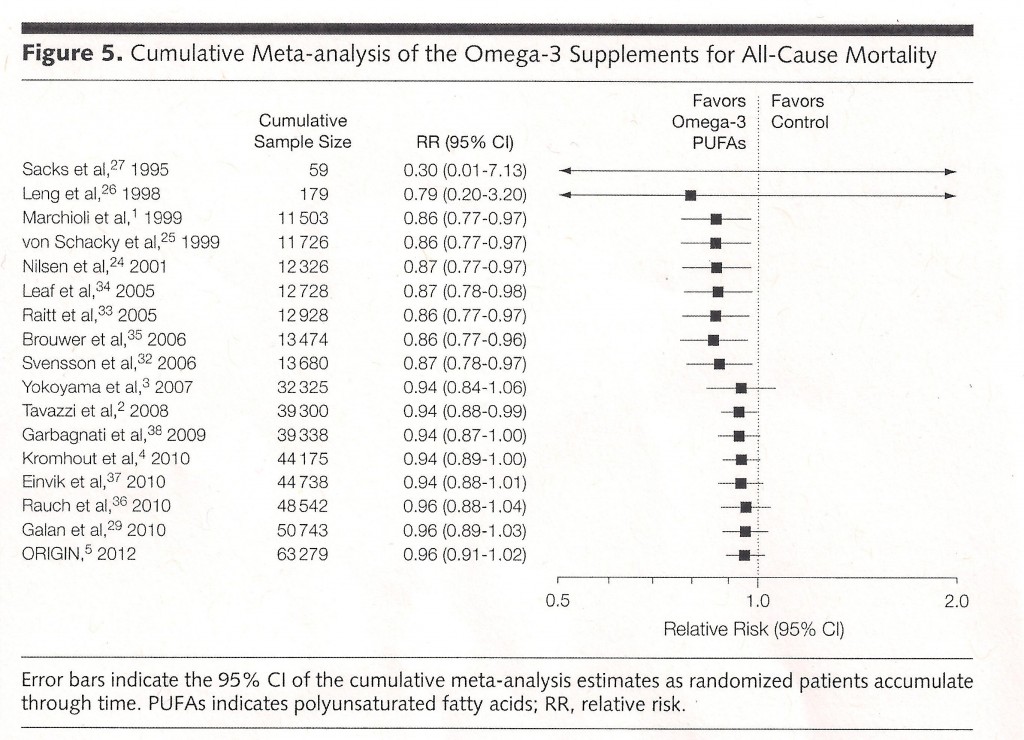

This chart from the recent meta-analysis published in the Journal of the American Medical Association beautifully illustrates the deliquescence of the effects of fish oil pills on mortality as research has accumulated.

A Relative Risk (RR) of 1.0 means there is no benefit; a number less than 1.0 indicates a health benefit, and a number greater than 1.0 indicates that the intervention is actually harmful. When only a little data was available, fish oil looked like manna from heaven. But with new studies and more data, the beneficial effect has shrunk to almost nothing. The current best estimate of relative risk (bottom row of the table) is 0.96, barely below 1.0. And the 95 percent “confidence interval” (the range of numbers in parentheses), which indicates how precisely the current estimate is pinned down, actually runs to a value slightly greater than 1.0, so “no effect at all” is still consistent with the data.
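To make the RR and confidence-interval reading concrete, here is a minimal Python sketch of how an RR and an approximate 95% CI can be computed from the raw counts of a single two-arm trial, and then read against 1.0. It uses the standard Katz log method; the function names are hypothetical, and the JAMA meta-analysis pools many trials, so its exact method may differ. The interval 0.91 to 1.02 is the pooled estimate discussed in the comments below.

```python
import math

def relative_risk_ci(events_treated, n_treated, events_control, n_control, z=1.96):
    """Relative risk with an approximate 95% CI (Katz log method).

    Assumes both arms have at least one event; otherwise log(RR) is undefined.
    """
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    rr = risk_treated / risk_control
    # Standard error of log(RR) for a single 2x2 table.
    se_log_rr = math.sqrt(1 / events_treated - 1 / n_treated
                          + 1 / events_control - 1 / n_control)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

def interpret(lower, upper):
    """Classify a relative-risk CI by where it sits relative to 1.0."""
    if upper < 1.0:
        return "benefit"       # whole interval below 1.0
    if lower > 1.0:
        return "harm"          # whole interval above 1.0
    return "inconclusive"      # interval straddles 1.0 (no effect)

# Pooled fish-oil estimate quoted in the thread: RR 0.96 (0.91 to 1.02).
print(interpret(0.91, 1.02))  # prints "inconclusive"
```

The point of `interpret` is that the central value (0.96) matters less than whether the whole interval clears 1.0.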

Why does this happen? Small studies do a poor job of reliably estimating the effects of medical interventions. For a small study (such as Sacks’ and Leng’s early work in the top two rows of the table) to get published, it needs to show a big effect — no one is interested in a small study that found nothing. It is likely that many other small studies of fish oil pills were conducted at the same time as Sacks’ and Leng’s, found no benefit and were therefore not published. But by the play of chance, it was only a matter of time before a small study found what looked like a big enough effect to warrant publication in a journal editor’s eyes.
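The filtering effect described above can be illustrated with a small simulation. This is a sketch under loudly hypothetical assumptions: 1,000 small studies of a pill that truly does nothing (true RR = 1.0), each estimating log(RR) with a standard error of 0.3, and a “publication” rule that keeps only studies showing a statistically significant benefit.

```python
import math
import random
import statistics

def simulate_small_studies(n_studies, true_log_rr, se, seed=0):
    """Simulate log-RR estimates from many small studies of the same pill.

    Returns (all_estimates, published), where 'published' keeps only the
    studies showing a statistically significant benefit, a crude stand-in
    for the filter applied by journals and authors.
    """
    rng = random.Random(seed)
    all_estimates = [rng.gauss(true_log_rr, se) for _ in range(n_studies)]
    # Significant benefit at the 5% level: upper 95% bound of log(RR) below 0.
    published = [est for est in all_estimates if est + 1.96 * se < 0]
    return all_estimates, published

all_est, published = simulate_small_studies(1000, true_log_rr=0.0, se=0.3)
print(f"RR across all studies:       {math.exp(statistics.mean(all_est)):.2f}")
print(f"RR across published studies: {math.exp(statistics.mean(published)):.2f}")
print(f"{len(published)} of {len(all_est)} studies 'published'")
```

Averaged over every study, the estimate sits near RR = 1.0; averaged over only the “published” ones, the same useless pill looks like it roughly halves the risk.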

At that point in the scientific discovery process, people start to believe the finding, and null effects thus become publishable because they overturn “what we know”. And the new studies are larger, because now the area seems promising and big research grants become attainable for researchers. Much of the time, these larger and hence more reliable studies cut the “miracle cure” down to size.
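A cumulative meta-analysis like the chart above re-pools all studies to date each time a new one arrives. The sketch below uses simple fixed-effect inverse-variance pooling (an assumption; the published analysis may use a different model) and made-up numbers: one small, lucky early study followed by two large studies near RR = 1.0.

```python
import math

def cumulative_pooled_rr(studies):
    """Cumulative fixed-effect (inverse-variance) meta-analysis.

    `studies` is a list of (rr, se_log_rr) in publication order; returns the
    pooled RR after each study is added, as in a cumulative forest plot.
    """
    pooled = []
    weight_sum = 0.0
    weighted_log_sum = 0.0
    for rr, se in studies:
        weight = 1.0 / se ** 2   # bigger study -> smaller SE -> more weight
        weight_sum += weight
        weighted_log_sum += weight * math.log(rr)
        pooled.append(math.exp(weighted_log_sum / weight_sum))
    return pooled

# Hypothetical numbers, not the real fish-oil trials.
studies = [(0.50, 0.35), (1.00, 0.10), (0.98, 0.05)]
for rr in cumulative_pooled_rr(studies):
    print(f"{rr:.2f}")
```

Because weight grows with the inverse square of the standard error, the large later studies quickly swamp the early one, and the pooled “miracle” melts toward 1.0.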

“It is likely that many other small studies of fish oil pills were conducted at the same time of Sacks’ and Leng’s, found no benefit and were therefore not published.”

Especially if the researchers worked for commercial companies with a vested interest in good news.

It’s an important principle that the results of all publicly funded research be published in some form, not necessarily in a peer-reviewed journal, but at least available on a website. (The publishers of scientific journals also have a vested interest in good or at least interesting news.)

I recall there was an initiative to ensure that the results of all clinical trials of pharmaceuticals are published. Does anybody know what happened to it?

James, I believe you are thinking of the goal of having all clinical trials registered in a publicly available database. See here:

http://www.clinicaltrials.gov/

From my days as a natural scientist, I remember having two great annoyances with the literature. One was the lack of reporting of null experiments (except for sexy physics nulls, like confirming the inverse square law of gravitation down to small masses or distances). The other was the disdain for review articles. Good people loved reading good review articles, but good people had no incentive to write them. I remember one fellow who had a real knack for synthesizing the literature, but not much of a knack for producing new knowledge of his own. He was marginalized, even though he was advancing the ball far more than those who were a bit better at producing new knowledge but lacked his synthetic skills.

I am sorry to hear this but not surprised. The US has such a bias in favor of the appearance of leadership and originality that there is a lot of waste. For your friend, it’s like being a football player who’s good at one of the things that aren’t in the stats. Sort of a cousin of the kill-the-messenger dynamic that necessitates whistleblower law. We need a rule about this tendency.

And it’s the same with the huge number of nonprofits that seem to be created, just so someone can be the CEO of it, never mind that either someone else is probably already doing it, or it’s something that’s actually not that important. I read a lot of job listings and you wouldn’t believe the nonsense I see. I almost want to make a rule — if you can’t even explain why your new org needs to exist, it shouldn’t. Also, please stop with the stupid names. And the bureaucratese. (Actually, the need for “evaluation” is a big part of the doublespeak. I’m not saying we don’t need that — clearly we do — but people should just recognize that it also leads to a whole lot of b.s. and cya’ing.) As if there is something shameful about … being a part of something. No, now we all have to be “leaders.” I’m so sick of this.

I noticed your article in SF Gate correctly blames sensationalizing media for the phenomenon, while your opening sentence here seems to implicate scientists.

I’m with Brad DeLong on this one: Why oh why oh why can’t we have a better media?

Everyday journalism has done a great disservice to science, on a par with how it mangles politics by bending over backwards to be fair to both sides.

The latest example: the faster-than-light neutrino headlines (the scientists were simply asking for help uncovering the flaw in their experiment): EINSTEIN ON HIS HEAD!

And before that? The media butchered global warming science by giving equal coverage to the 3% of atmospheric scientists who denied it.

I still get a kick out of this site that maintains that “equal balance”: http://climatedebatedaily.com/

So the question here is why do we have such stupid journalism?

The answer is that our journalism must sell itself in the “marketplace for eyeballs”.

It’s driven by the hard realities of capitalism. To sell itself most prodigiously it tends to sensationalize the latest cautionary science.

This crap we see is literally baked into the capitalistic cake.

I am not saying capitalism can’t do journalism. It can.

But for the most part it can’t do it without putting in too much salt and smearing on too much frosting.

I noticed your article in SF Gate correctly blames sensationalizing media for the phenomenon, while your opening sentence here seems to implicate scientists.

Hi Koreyel — I would say there is enough blame to go around. Scientists and journalists sometimes enable this in each other. The best scientists and journalists resist the temptation to over-hype, but it only takes a few bad apples to fill up the public discourse in the Internet age.

As a longtime sci/tech journalist, I’m going to put a bunch more blame on the scientists’ institutions. Most science reporters don’t have the specialized knowledge required to connect the dots between some new finding and a sensational result. But the public-information officers at the scientists’ institutions do (or have the clout to badger the scientists into doing so). And they (along with the similar folks at the journals) write the press releases that get the reporters’ attention. Sure, as a reporter I could do a bunch of skeptical research and end up telling my editor, “Yep, I put in a week’s work to determine there’s nothing here worth printing,” but that’s not a good career move. (And the next time my editor is at a reception with some bigwig from the institution in question, there’s going to be the embarrassing “Everybody else covered our new XYZ, but I see you folks didn’t” conversation.) As long as institutions like to get their names in the press, this cycle will continue.

Meanwhile, publication bias. Which comes not only from the whole set of cognitive mechanisms designed to find patterns, but also from the reality that a lot of negative outcomes are clearly the result of having screwed up somewhere during the design or execution process.

Perhaps the problem is not so much journalists as journalism, or the business journalism finds itself in?

Just as a person who reads a lot of news, I have to side a little bit with Paul. I don’t have the energy to track down a study and pull it apart myself. But, I’d like to know what’s happening, you know?

Why isn’t there someone who does this? There should be a Consumer Reports type of organization for people like me. From what you’re all saying here, the fact that something gets printed in a respectable journal no longer means much? So, in this case, maybe we *do* need a new organization, with all manner of CEOs and what-not.

It looks to me like fish oils became significantly worse starting in 2007. Before then, when they were all manufactured here in the USA, the quality was high and they really had an effect. Since moving production to China, all sorts of contaminants are diluting the effect.

All joking aside, why couldn’t this be time-dependent? Stradivarius violins sound great because of a cold spell that made the trees denser for a few years when he was making them - maybe the composition of fish oil changed for some reason in the last 6 years? Perhaps colder oceans equate to effective fish oils?

SSS

That is all.

If a baseball player hits a home run in his first career appearance, it does not mean he is the next Willie Mays.

MobiusKlein has it.

Of course something really big DID happen with fish oil pills in 2007 — the amount of available data more than doubled, giving us a much more reliable effect estimate than before.

No. But he may be the next Yogi Berra..:-)

I’ve been reading Kahneman’s excellent Thinking, Fast and Slow. He has an interesting discussion of how the greater variability of small samples leads to all sorts of logical errors.

The discussion of that review article confused me. Yes, the effect is now estimated to be smaller than before, but still: a 4 percent reduction in mortality seems like a huge benefit compared to the small cost of fish oil pills. Yes, the 95 percent confidence interval includes 1.0, but it also includes 0.91, a NINE percent reduction. And again, this is ALL-CAUSE mortality. Many people seem to get excited about treatments that cause a 20 percent reduction in conditions that account for only a tiny share of mortality.

What about the cost to the fish and to the environment of having so many fish being subject to additional threats for such a relatively puny gain to us, particularly given that there are other ways to alleviate the same problems?

I think most of the fish oil we buy in capsules is from menhaden, which are a small baitfish not directly consumed by humans. Management of the menhaden fishery in the Chesapeake Bay has been a subject of some controversy recently, but we are managing it, and it does not appear we are about to kill off the species.

Nothing in my post says that they definitely have no benefit. It could be they have a very small benefit that takes tens of thousands of cases to measure reliably (and I wonder if what is left beats having fish for dinner now and then).

On this point “Yes, the 95 percent confident interval includes 1.0, but it also includes 0.91 – a NINE percent reduction”

If you are going to ALL CAPS one end of a confidence interval, figuratively and literally, you should for accuracy do so on both ends by noting that the CI doesn’t just “include 1.0”: it goes over it, meaning that it is possible that fish oil pills cause a two percent increase in deaths.

Good point; I should have noted the full range of the 95 percent CI.

I also should have been clearer when referring to “the discussion.” I wasn’t referring to your post, but to the article itself and the news coverage. For example, the conclusion section of the abstract is “Overall, omega-3 PUFA supplementation was not associated with a lower risk of all-cause mortality, cardiac death, sudden death, myocardial infarction, or stroke based on relative and absolute measures of association.” That could have been written as, “Overall, no statistically significant association was found between omega-3 PUFA supplementation and a lower risk of all-cause mortality, cardiac death, sudden death, myocardial infarction, or stroke based on relative and absolute measures of association, although the central estimate of a 4 percent reduction in all-cause mortality would be clinically significant.”

And the news coverage had statements such as “the fatty acids have no impact on overall death rates, deaths from heart disease, or strokes and heart attacks.” (Reuters, http://articles.chicagotribune.com/2012-09-11/lifestyle/sns-rt-us-heartbre88b04d-20120911_1_omega-3-fish-oil-pills-fatty-acids) and “Omega-3 fatty acids, hailed by some for properties said to enhance heart health, were found to have no effect in reducing the risk of stroke, heart attack or death, according to a study released Tuesday.” (AFP, http://www.nydailynews.com/life-style/health/new-study-shows-omega-3-fatty-acids-reduce-stroke-heart-attack-risks-article-1.1158445)

Yes, the general bias is towards reporting positive findings, but in this case (but not in your post) there seemed to be an oversimplification in the other direction: “Omega-3s are useless.”

Every once in a while I read a really good article that stays with me, and the Atlantic had an article about a doctor in Greece who has focused his career on just this phenomenon:

http://www.theatlantic.com/magazine/archive/2010/11/lies-damned-lies-and-medical-science/308269/

I found it to be quite illuminating; the whole article is worth your time if you are interested, but here is the real killer finding:

“In the paper, Ioannidis laid out a detailed mathematical proof that, assuming modest levels of researcher bias, typically imperfect research techniques, and the well-known tendency to focus on exciting rather than highly plausible theories, researchers will come up with wrong findings most of the time. Simply put, if you’re attracted to ideas that have a good chance of being wrong, and if you’re motivated to prove them right, and if you have a little wiggle room in how you assemble the evidence, you’ll probably succeed in proving wrong theories right. His model predicted, in different fields of medical research, rates of wrongness roughly corresponding to the observed rates at which findings were later convincingly refuted: 80 percent of non-randomized studies (by far the most common type) turn out to be wrong, as do 25 percent of supposedly gold-standard randomized trials, and as much as 10 percent of the platinum-standard large randomized trials. The article spelled out his belief that researchers were frequently manipulating data analyses, chasing career-advancing findings rather than good science, and even using the peer-review process—in which journals ask researchers to help decide which studies to publish—to suppress opposing views.”

So much for peer review.

article about a doctor in Greece...

Dr. Ioannidis, I am happy to say, is now here at Stanford.

And he has proposed that all blockbuster drugs have mega-trials which should randomize at least 10,000 patients to each group, one group to treatment with the blockbuster and one group to the least expensive effective intervention available, for example, to a generic drug with acceptable effectiveness and safety. He thinks that for a drug with $2 billion in annual sales, one month of sales would suffice to conduct a mega-trial with 80,000 participants with four years of follow-up.

Such large trials could detect event rates that are missed in FDA licensing trials, which generally enroll only a few hundred patients and follow them for only a few months, up to a year.
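A rough sketch of why trial size matters for rare harms. It assumes a hypothetical adverse event that strikes 1 in 1,000 treated patients (an illustrative rate, not taken from any trial) and computes the chance that a trial sees even a single case; the last lines just redo the budget arithmetic from the comment above.

```python
def prob_at_least_one_event(n_patients, event_rate):
    """Chance that a trial arm observes at least one case of an adverse event,
    assuming each patient independently has probability `event_rate`."""
    return 1.0 - (1.0 - event_rate) ** n_patients

# Hypothetical event rate of 1 in 1,000 treated patients.
for n in (300, 10_000):
    print(n, round(prob_at_least_one_event(n, 0.001), 3))

# One month of $2 billion in annual sales, spread over 80,000 participants.
budget_per_participant = 2e9 / 12 / 80_000
print(round(budget_per_participant))
```

A few-hundred-patient licensing trial will usually see no case at all of such an event, let alone enough to compare against a control group; a 10,000-patient arm almost certainly will.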

There is a lot of debate about the phenomenon of publication bias, whereby studies with “positive” results are more likely to be published in journals than “negative” articles which fail to show “positive” results. Positive studies tend to be published earlier than negative studies, and this explains part of the trend seen in the diagram above.

http://f1000research.com/articles/1-59/v1 argues that journal editors shoulder much of the blame for publication bias, accepting positive studies more readily than negative studies.

http://f1000research.com/articles/2-1/v1 argues that it is the authors of negative studies who bear the responsibility for not submitting their findings for publication: the “file-drawer” problem. I think that this argument is better supported by the facts.

http://openmedicineeu.blogactiv.eu/files/2012/06/6-June-2012-Dr-P-Gotzsche-Why-we-need-to-open-up-health-research-by-sharing-our-raw-data3.pdf makes the important point that failing to publish can have deadly consequences for patients. We all remember when Merck withheld research on Vioxx causing heart attacks; this achieved infamy when it became public. Less well known is the story of lorcainide, a Class Ic antiarrhythmic drug which was tested in 1980 and shown to increase mortality in patients with heart attacks even though it reduced the frequency of serious rhythm disturbances relative to placebo. There were 48 patients randomized to lorcainide and 47 to placebo; there were 9 deaths in the lorcainide group and only 1 in the placebo group.

The authors did not submit their results for journal publication. They had a “statistically significant” reduction in the primary outcome they had designed the study to detect, namely the frequency of arrhythmias in the setting of a heart attack; they had not set it up as a study of mortality rates with lorcainide. They attributed their mortality results to chance (even though the difference met conventional standards for statistical significance, with p=0.015 by Fisher’s exact test). For commercial reasons the company abandoned further development of the drug and the study was relegated to a file drawer.
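The quoted p-value can be checked from the counts given above (9 of 48 deaths on lorcainide, 1 of 47 on placebo). Below is a minimal, standard-library-only sketch of a two-sided Fisher’s exact test; the function name is hypothetical, and in practice a statistics package would be used instead.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are treatment groups, columns are (died, survived). The p-value sums
    the hypergeometric probabilities of every table with the same margins that
    is no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2

    def table_prob(x):
        # Probability that x of the col1 deaths fall in row 1, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(total, col1)

    p_observed = table_prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tied tables are not lost to rounding error.
    return sum(p for p in map(table_prob, range(lo, hi + 1))
               if p <= p_observed * (1 + 1e-7))

# Lorcainide trial: 9 of 48 died on drug, 1 of 47 on placebo.
p = fisher_exact_two_sided(9, 48 - 9, 1, 47 - 1)
print(f"p = {p:.3f}")
```

Run on the lorcainide counts, this reproduces the p = 0.015 cited above.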

The bad thing is that similar class Ic antiarrhythmic drugs came on the market in the 1980s and were in widespread use for several years before it was shown that they also reduced ventricular arrhythmias at the expense of killing lots of people. Perhaps if the lorcainide study had been published in 1980 a tragedy could have been mitigated.

John Ioannidis is indeed the rock star of this field of research, and deservedly so. This stuff may seem arcane but it really does matter to human beings who would prefer their loved ones be alive rather than dead. Congratulations to Stanford for recruiting him.

You forgot to point out that this is a pathology particularly of epidemiology.

Epidemiology is fine as a suggestive pointer towards correlations, but that is ALL it is. Until you understand the relevant physiology, you have nothing.

Unfortunately (this is going to sound cruel, but it’s the truth) epidemiology is easy, especially if you don’t care that much about the truth because you are already convinced you know it, while physiology is hard. All you need to do for epidemiology is send out some questionnaires, then fish for correlations. At a confidence level of 95% (pathetically low) that’s always possible.

http://xkcd.com/882/

Voila — paper.

To make it worse, statistics is taught as a set of recipes, and so

(a) we get people who have no idea what the SEMANTIC difference is between statistically significant and “really” significant. It may be statistically significant that two fish oil pills a day reduce heart attacks. It may even be statistically significant at the 99.999% confidence level. But if the amount of the reduction is .001 then WTF cares? A strong statistical significance does not necessarily translate into a strong medical significance. This particular example is not merely hypothetical. The claim that oatmeal reduces heart attacks, as I recall, falls into this bin: the evidence is strong, but the extent of the effect is minuscule (and if you ramped up your oatmeal consumption dramatically to try to boost it, you’d be ramping up your intake of starch, and so your blood glucose levels, and so your insulin levels, and that would all be a lot worse for your heart than the minor positive effect, presumably of the oat bran).
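A sketch of how the same tiny effect can be “significant” or not depending only on sample size. The numbers are hypothetical: risk falls from 5.0% to 4.9% (an absolute reduction of 0.1 percentage points), analysed with a standard pooled two-proportion z-test.

```python
import math

def two_proportion_p_value(p_control, p_treated, n_per_group):
    """Two-sided p-value of a pooled two-proportion z-test (equal group sizes)."""
    pooled = (p_control + p_treated) / 2
    se = math.sqrt(pooled * (1 - pooled) * 2 / n_per_group)
    z = (p_control - p_treated) / se
    # Two-sided normal tail probability: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))

# Same 0.1-point absolute reduction, very different trial sizes.
for n in (1_000, 1_000_000):
    print(n, round(two_proportion_p_value(0.050, 0.049, n), 4))
```

The effect size never changes; only the p-value does. With a million patients per arm the difference is highly “significant”, yet about 1,000 people would need treatment to prevent one event.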

Finally, IMHO, the academic world (and its analogs in other parts of the medical establishment like research hospitals and the NIH) is way too genteel with individuals who blow findings out of proportion. The lesson of Ancel Keys has been that if you bludgeon everyone in your way, suppress every data point that doesn’t fit your hypothesis, and generally act like you have a direct line to god, you will be rewarded. The lesson SHOULD be that if you behave this way you’ll be mocked by your peers, and eventually ignored. If it were common practice to shun those who talked up these ridiculous p < 0.05 (and no physiological story) findings, perhaps we’d see less of it.

(Note. I’m not suggesting criticism of those who publish results that turn out to be wrong. Science is hard, and we all make mistakes. I AM suggesting criticism of those who insist that WEAK evidence be treated as, and spoken about, especially in public, as STRONG evidence.)

Maynard,

I’d put your third paragraph a little differently. Bad epidemiology is easy; good epidemiology is hard. And in the medical field, epidemiology is much better than what it mostly displaces: clinical impressions.

Otherwise, word.

Well, let’s all remember: an epidemiologist is just a physician broken down by age and sex.

Oh that is good.

Actually, Ioannidis focused initially on problems arising from published findings in the field of molecular genetics in his best-known essay:

http://www.plosmedicine.org/article/info:doi/10.1371/journal.pmed.0020124

Epidemiology is indeed highlighted in this 2005 paper, and its methods are used to argue the case that most published research findings are false. However, Ioannidis did think that “most research findings are false for most research designs and for most fields.” Epidemiology literature is filled with articles showing great awareness of precisely those problems associated with data-fishing, and the xkcd.com illustration is emphasized in almost any introductory statistics course. After all, it was Bradford Hill, the patron saint of the study of causation in epidemiology, who used biological plausibility as one of the considerations to use in assessing proposed causal relations. Just as oncologists are more aware than anyone else of the toxic side effects of their treatment regimens, epidemiologists and statisticians are more aware than almost anyone about the woes arising from associations which arise from artifact rather than from reality.

http://www.plosmedicine.org/article/info:doi/10.1371/journal.pmed.0050201

similarly cites the literature on molecular findings in fruit fly genetics to illustrate one of its key points. This essay is special because it illustrates the problems in terms of principles of economics, and in a blog like this, which has a high proportion of contributors who are well-versed in economics, it would be interesting to see how others evaluate the application of concepts such as oligopoly, artificial scarcity, and the “winner’s curse” in auction research to the problems of the distortion of science by current publication practices.

Thanks for mentioning Ancel Keys. That guy did a lot of damage with his rather selective studies.

Another example of how the complication of today’s world makes it hard to discover the “truth” (about the effects of animal fat on heart disease and mortality in his case).

I don’t know too much about meta-analysis, though I know a little statistics. If you showed me Figure 5 above without any other discussion, I would say that it shows distinct evidence for a small reduction in mortality rate. Yes, the CIs for the larger studies all cross 1.0, but the probability that all of them would be well on the left of 1.0 by chance is very low. Also, I thought the paper’s multiple-testing threshold for the sub-categories was very stringent (p = 0.05 / 8 tests), particularly given that some of these tests are presumably correlated with each other (e.g., RR and RD for the same study). And given that all the other meta-analyses cited in eTable 2 are also to the left of 1.0, that also suggests a small beneficial effect (though obviously the meta-analyses use overlapping data and are strongly correlated). But again, I’m pretty new to this stuff, so that’s just my impression.

The CIs on the right are for the cumulative estimate (i.e., the pooled result of all studies on that line or above), so they’re not independent. Differentiating by eye, if you were to plot the CIs of just the meaningfully big individual studies, Marchioli would be to the left, Yokoyama to the right, and ORIGIN a little to the left.

Ah, of course, duh. And looking at the paper, Figure 4 is the independent results. Thanks.

So, this graph says that after 62,000 patients have been studied, we now have an estimate of 4% reduction in all-cause mortality, with about an 85% chance of the actual figure being positive? That seems almost too good to be true. How can something that cheap be that effective?
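One rough way to turn a reported RR and 95% CI into a “chance the true effect is a benefit” is sketched below. It assumes log(RR) is normally distributed and treats the CI as if it were a Bayesian interval under a flat prior; this is a common shortcut, not a rigorous analysis, and the function name is hypothetical.

```python
import math

def prob_benefit_from_ci(rr, lower, upper, z=1.96):
    """Rough P(true RR < 1) from a reported RR and its 95% CI.

    Assumes log(RR) is normal and reads the CI as a flat-prior posterior
    interval; a back-of-envelope shortcut, not a full Bayesian analysis.
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    z_score = -math.log(rr) / se   # how many SEs log(RR) sits below 0
    return 0.5 * (1 + math.erf(z_score / math.sqrt(2)))

# Pooled estimate from the thread: RR 0.96 (0.91 to 1.02).
print(round(prob_benefit_from_ci(0.96, 0.91, 1.02), 2))
```

On these rounded figures the shortcut gives roughly 90 percent, a bit above the 85 percent guessed above; the answer is sensitive to how the published bounds were rounded.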

One measure of effectiveness in common use is the number needed to treat, generally interpreted as the number of patients who have to use an intervention for some period of time to prevent one unwanted event from occurring. It is calculated as the reciprocal of the absolute risk reduction, which is determined by the risk in the untreated minus the risk in the treated groups.

The death rate among all participants was about 6.17 percent. The median duration of treatment was 2 years.

With a relative risk reduction of 9%, the absolute risk of death comes down to about 5.6 percent. The risk difference is about 0.55 percent, and the reciprocal of this is 180. This figure is the number needed to treat to prevent one death. If 180 people take omega-3 supplements for 2 years, it is estimated that one of them will live rather than die as a result of treatment.

This can be interpreted as saying that among the 180 people who take the supplement for 2 years, 179 are unaffected by treatment and 1 benefits, when the benefit is prevention of death.

Most of the studies were secondary prevention, meaning that they were done in people who had already had a heart attack or other cardiac event. For people who are healthy and have never had a cardiac event (primary prevention), the number needed to treat is expected to be higher than 180.

Whether you consider 180 as an excessively high number needed to treat is going to be a matter of personal values. If I had had a heart attack, I might still think that the bet was worth taking, even though chances are that it will make no difference in my 2 year survival.

Bear in mind that all this stuff is based on approximations and estimates. But numbers needed to treat do provide some additional perspective on the issues involved in decision-making. Also bear in mind that 9% relative risk reduction was sort of a best-case scenario, and 180 is likely to be an optimistic estimate of numbers needed to treat. The number may actually be infinite.
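Ed’s calculation can be written out as a small function. This is a sketch (the function name is hypothetical) using his figures: a 6.17% baseline two-year death rate and a 9% relative risk reduction (RR = 0.91). It also shows the pooled point estimate RR = 0.96, and the “infinite” case when there is no benefit.

```python
import math

def number_needed_to_treat(baseline_risk, relative_risk):
    """NNT = 1 / absolute risk reduction, where ARR = baseline * (1 - RR)."""
    absolute_risk_reduction = baseline_risk - baseline_risk * relative_risk
    if absolute_risk_reduction <= 0:
        # No average benefit: the NNT is unbounded. (A negative ARR would
        # instead be read as a number needed to harm.)
        return math.inf
    return 1.0 / absolute_risk_reduction

# Ed's figures: 6.17% two-year death rate, 9% relative risk reduction.
print(round(number_needed_to_treat(0.0617, 0.91)))   # 180
# The pooled point estimate, RR = 0.96, and a null effect, RR = 1.0.
print(round(number_needed_to_treat(0.0617, 0.96)))
print(number_needed_to_treat(0.0617, 1.0))
```

With the central estimate of 0.96 rather than the best-case 0.91, the NNT rises to roughly 405.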

Very lucid discussion of NNT, Ed. The other question worth considering is whether any benefit is unique to fish oil pills or if it has substitutes that work as well or better at similar cost (i.e., don’t buy fish oil pills, but have fish for dinner twice a week, or go for a brisk walk every other day, or take 81 mg of aspirin a day etc.).

One of the lead authors (Kromhout) of one of the studies included in the meta-analysis posits that the diminished protective effect is due to the low marginal benefit of PUFAs in patients receiving “state-of-the-art” drug treatment.

So the efficacy of PUFAs as a prophylactic doesn’t seem quite settled yet.

(Of course, another confound is whether these studies used equivalent dosing regimens)

Were the participants in these studies receiving any other treatment for heart ailments? Can one say that taking a single substance (like fish oil) has very little effect on mortality, when people may be receiving other treatments preventing mortality from heart disease (medications, bypass surgery, etc), many of which are expensive, adding to the total cost of health care in the U.S?

Also, I see that NIH/MedlinePlus (last reviewed 7/2012) asserts that taking fish oil supplements “really does lower high triglycerides.” http://www.nlm.nih.gov/medlineplus/druginfo/natural/993.html

Does having a high triglyceride level not contribute to heart disease?

So, I’m wondering if we’re seeing mortality from heart disease decrease overall, due to a variety of factors (which could include better diets, better medications, etc), which could change the results of studies like these.

Thsidave and daksya have raised another important issue which relates once again to the number needed to treat (NNT). NNT depends both on the relative risk and the risk difference associated with an intervention.

Risk is the probability that an unwanted event will occur. Relative risk is the risk in one group divided by the risk in another group. Risk difference is the risk in one group minus the risk in another group. The two measures of effect are closely related, but have different implications for decision making.

Polio is so rare that it is effectively eradicated from the Western Hemisphere. Alzheimer’s disease is common. The underlying risks are different for the two diseases, and this has implications for decisions you make regarding the safety and desirability of various interventions.

If there were an injection you could have which doubled your risk of polio, but reduced your risk of Alzheimer’s disease by 10%, you ought to jump at it as fast as you can. There were zero new cases of polio in the US in 2010, but there were about 450,000 new cases of Alzheimer’s disease. If everyone in the country had the injection, it would cause zero new cases of polio but would prevent 45,000 new cases of Alzheimer’s disease. This would be a bargain, even though the relative risk of polio was doubled (RR=2.0) and the relative risk of Alzheimer’s was reduced by only 10% (a RR of 0.90). The relative risk reduction would be small, but the risk difference would be enormous.

Similarly, the lower the underlying risk of an unwanted event like cardiac death in a population at risk, the smaller the risk difference obtained when a treatment with a known relative risk reduction is implemented. If most people with a history of heart disease are receiving effective therapies to reduce the risk of recurrence, then the risk difference associated with additional measures (like fish oil) will be smaller. Since NNT depends on risk difference (being the reciprocal of risk difference), the NNT for fish oil will increase as the underlying risk is reduced by the other treatments.
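Both points can be sketched in a few lines. The function names are hypothetical; the polio and Alzheimer’s figures are the 2010 US numbers quoted above, and the 3% and 1% baseline risks are made-up values chosen only to show the trend.

```python
def cases_prevented(annual_cases, relative_risk):
    """Change in cases if everyone receives an intervention with this RR.

    Positive = cases prevented, negative = extra cases caused.
    """
    return annual_cases * (1 - relative_risk)

def number_needed_to_treat(baseline_risk, relative_risk):
    """NNT = 1 / (baseline risk * relative risk reduction); assumes RR < 1."""
    return 1.0 / (baseline_risk * (1 - relative_risk))

# The hypothetical injection: RR 2.0 for polio, RR 0.90 for Alzheimer's.
print(round(cases_prevented(0, 2.0)))         # polio: no extra cases
print(round(cases_prevented(450_000, 0.90)))  # Alzheimer's: ~45,000 prevented

# Same 9% relative reduction, shrinking baseline risk -> growing NNT.
for baseline in (0.0617, 0.03, 0.01):
    print(baseline, round(number_needed_to_treat(baseline, 0.91)))
```

The relative effect is held fixed throughout; only the baseline risk changes, and the NNT grows in inverse proportion to it.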

First time trying the comment link for the new website, hoping it goes through!

Ahh. Thank you, Ed.

A couple of comments:

1) The early studies were mostly on people who were not taking statins; the later ones were on people who generally were taking statins.

2) A big driver of these results is the Yokoyama study - note in the diagram this is where there is the first big move towards no significance. This was a large open-label (not blinded) study in a Japanese population of mainly women who had a very low underlying rate of cardiac mortality in the first place (and where the control group likely ate a fair amount of fish to boot).

For some further criticism of some of the studies (like DART 2) included in this paper, see

http://www.issfal.org/statements/hooper-rebuttable

My main point is that this is a controversial and complex topic, and simple explanations of publication bias may well not be the true explanation here at all. More likely there is indeed a small positive effect from omega-3 supplementation, although perhaps not on top of a statin.

Peter

deliquescence!? Really? Lovely word, but why would you use it here?

Because the large effect melted away over time.

Thanks for the concise write-up. The second to last paragraph, essentially about “program survival bias”, is a fact and lesson that so many biotech investors fail to learn.