I got into an interesting argument in the comments on a post I wrote on nuclear energy. Keith wrote something that draws a tangent of much wider import:

There was intense opposition to nuclear power from many activists before anyone was focused on climate change, so now there is a credibility problem for critics, i.e., “Group that always hated nuclear power on principle still hates nuclear power for new reason” isn’t persuasive to most voters.

The proposition is that nuclear opponents changed their argument, which indicates opportunism and bad faith, ergo many people see this as invalidating the argument.

I challenged the fact pattern in the comments thread there, and see no evidence of the alleged tacking. (Any reader comments on the issue please in the other post thread, not here.) Still, let’s assume it’s true. So what?

At first sight this is simply an example of the ad hominem fallacy, or as the French nicely say, “procès d’intention”. The motives and character of the person making an argument are simply irrelevant to its validity. One of the routine jobs of intellectuals, public or no, is to raise the red flag on such elementary mistakes and tell their authors to cut it out.

Up to a point, Lord Copper. The case is more complex than with a straight logical fallacy like petitio principii, and several strands need to be disentangled.

Keith is undoubtedly right to think that ordinary people do weigh credibility in assessing arguments. I suspect this is part of Daniel Kahneman’s Type 1 thinking: the fast, efficient and kludgy Hare processes that allowed our distant ancestors to make quick decisions based on incomplete information. These are (though Kahneman does not make the claim) probably hard-wired into the brains of their descendants, that is us. They are in contrast with the slow and effortful Type 2 Tortoise processes of abstract reasoning. Dismissing arguments from untrustworthy sources saves time and allows us to move on.

But, says our Type 2 brain, it’s still a fallacy with a real practical downside. Dismissing tainted sources makes us miss out on some useful reasoning. This is not a remote possibility. A good example from an extremely tainted source is the Nazi opposition to smoking and cruelty to animals. As far as I can tell, this was based on sensible premises – unlike their equally correct suspicion of the austerity financial policies recommended by bankers, influenced for at least some by the belief that the banks were controlled by a cabal of sinister Jewish incubi determined to impoverish Aryan Germans (link to revolting cartoon from 1931). The term “batshit crazy” does not do justice to this evil fantasy.

Other examples are the famous Milgram and Stanford Prison experiments in psychology, which show how easy it is to get normal people to commit atrocities. As I understand it these would in their original form now be considered unethical, as the subjects are very distressed when the façade is torn down and they find out what they are capable of. The results are still valuable, and add to the obviously unrepeatable field observations of Christopher Browning on reservist SS troopers. More broadly, it is simply part of education to learn to address arguments from people you find uncongenial.

That’s one side. On the other, it is surely not required to treat tainted and reputable sources equally. Read the whole of the now famous tirade of Daniel Davies about the justifications put forward for Gulf War II:

Good ideas do not need lots of lies told about them in order to gain public acceptance. …. Fibbers’ forecasts are worthless… There is much made by people who long for the days of their fourth form debating society about the fallacy of “argumentum ad hominem”. There is, as I have mentioned in the past, no fancy Latin term for the fallacy of “giving known liars the benefit of the doubt”, but it is in my view a much greater source of avoidable error in the world.

Fair enough. So we face a procedural dilemma. Neither full-on obedience to the ad hominem rule nor its simple rejection seems adequate. Where do we draw the line?

We do need to distinguish between claims of fact and the reasoning built on them. For facts, the legal maxim falsus in uno, falsus in omnibus is a fair guide: don’t trust liars, and if you must use their work, double-check every claim they make. But what about their reasoning? Can’t we evaluate this independently of the claims of fact?

If reasoning were all syllogisms or mathematical deduction, we no doubt could. The following real-life example is a perfectly sound logical inference, albeit from unacceptable premises:

- Socrates is a corrupter of youth.

- The laws of Athens say that a corrupter of youth must be put to death.

- The laws of Athens are just.

- Therefore Socrates must die.

If we disagree with the conclusion, and we do, it’s necessary to attack one or other of the premises. But in the typical case, facts are linked by inductive not deductive chains, calling on assumptions about the laws and state of nature as well as judgments of probabilities, both scientific and psychological. Would Saddam Hussein attack Israel if he had WMDs? Would the Iraqi people welcome an invading army of liberation? These are not yes/no facts.

In these complex assessments, trustworthiness is surely relevant. We rely on experts – doctors, statisticians, rocket scientists, economists, engineers, intelligence analysts, reporters – to inform us how the world works, drawing on long study or experience we can never ourselves emulate. We have to be able to trust them. Expert judgement is fallible, but it usually beats amateurs picking with a pin or clicking on an ad in Facebook.

This even applies, I understand, in the higher reaches of pure mathematics, the temple of deductive reasoning, where a new proof can be hundreds of pages long or the printout of a computer program exhaustively searching thousands of cases. I recall (but cannot trace) a description of the social process of acceptance of a new proof by the mathematical community, based on trust in colleagues expert in the relevant sub-area who accept the proof on detailed examination.

Trustworthiness is not a binary concept but a scale. We may allow that complete untrustworthiness is binary, as with Daniel Davies’ proven liars. So the ad hominem problem for inductive reasoning as well as claims of fact becomes one of calibrating our trust discount in a particular case not involving such liars.

Keith rightly mentions the emotional investment some may have in an issue as a distorting factor. We cannot usually wish this away by only listening to neutral experts. The investment is not determined by the people but by the issue. Andrew Wiles’ proof of Fermat’s Last Theorem took him years of dedicated work, but there were no impassioned pro- and anti-theorem schools in the background. Colleagues found a hole in his first proof, which he calmly acknowledged, then fixed to general applause. Contrast drugs policy, abortion, and nuclear power, where passions run high on both sides. Mark Jacobson (anti-nuclear) actually sued Christopher Clack (pro-nuclear) and the National Academy of Sciences as publisher over a hostile rebuttal to his first 100% renewables scenario. Both are reputable career scientists.

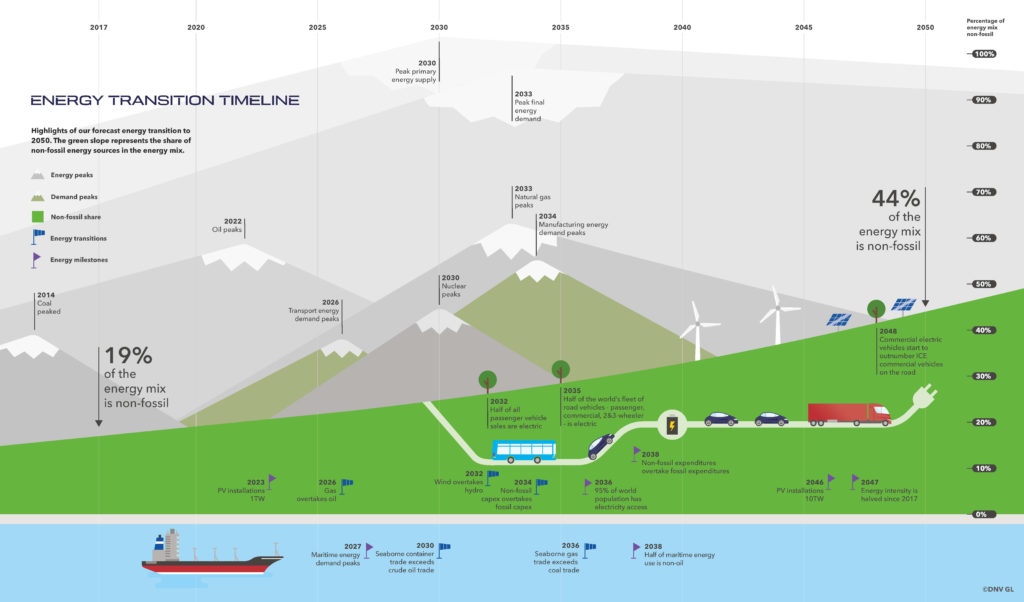

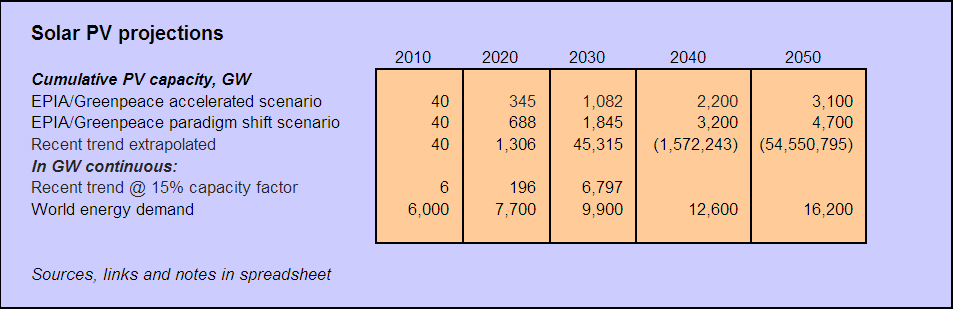

In such fields, it is generally impossible to find anybody with deep expert knowledge who does not have strongly held opinions on one side or the other of the relevant policy. Controversy and conflict are integral to the scientific and democratic processes. This applies in spades to advocacy groups, formed specifically to advance one or other policy. Greenpeace is not going to give you a sympathetic in-depth analysis of coal-mining. But its scenarios of solar deployment have consistently been much more accurate than those of professionals at the IEA.

What should the common reader or blogger do in this situation? I can only offer bromides.

- Eliminate known liars and hired propagandists completely from consideration, see above.

- Take into account formal credentials, institutional affiliations and possible conflicts of interest, as guides not filters.

- Check whether the author fairly represents the opposing view or sets up straw men, notes unhelpful data or brushes it under the carpet.

- Ignore tone short of abuse. Bias can hide under a façade of judicious neutrality, passion can be combined with fairness (see the model of Mark Kleiman). (This one may be a personal preference).

- Check your own bias and lean over backwards to be fair to the side you aren’t on. IIRC David Hume, when writing the Dialogues Concerning Natural Religion, wrote to theologians to be sure he was presenting the cases of Cleanthes and Demea as well as possible, assuming he was Philo himself. (Can’t confirm this, help wanted.)

- Remember that historians deal with and correct for biased sources all the time. Perhaps there is no other kind.

We now have an unsatisfactory answer to the question posed in the title: it depends. Sometimes the ad hominem rule calls for a red card (off the pitch), at others just an orange one with a dimmer (proceed with more or less caution).

Not much help? Welcome to the real world. Trust me.

[Update 30/7/2019]: A 2006 blog post by noted Australian economist John Quiggin on very similar lines.

[Update 2, 4/08/2019]: Australian conservative pundit Andrew Bolt reminds us that there is another form of ad hominem attack, one that is not only fallacious but obnoxious. He devotes an entire column in Murdoch’s Melbourne newspaper the Herald-Sun to an unhinged and scurrilous personal attack on the teenage Swedish climate activist Greta Thunberg. Sample:

I have never seen a girl so young and with so many mental disorders treated by so many adults as a guru.

More here. Ms Thunberg has Asperger’s syndrome and does not conceal the fact. She shares it with several other famous people, possibly including Albert Einstein and Isaac Newton. I’m not sure what condition Andrew Bolt suffers from, but it probably ends in “-path”.

It’s a good symbol. The ring of stars evokes an ideal of common values and aspirations, as in Schiller’s Ode to Joy: “Űber Sternen muẞ er wohnen”. The empty space it defines but does not enclose invites states and citizens to fill it with the meaning and praxis they choose. Unlike the Ode, picked as the European anthem, it is not impossibly élitist for amateur use (*).

It’s a good symbol. The ring of stars evokes an ideal of common values and aspirations, as in Schiller’s Ode to Joy: “Űber Sternen muẞ er wohnen”. The empty space it defines but does not enclose invites states and citizens to fill it with the meaning and praxis they choose. Unlike the Ode, picked as the European anthem, it is not impossibly élitist for amateur use (*). The circle of stars is not standard heraldry. The one previous use I could find was the shortlived 13-star Betsy Ross flag of the American revolutionaries. This was replaced progressively by the rectangular arrangement of today, with one star for each state, as settled in 1818. There is no reason to think the Eurocrats were inspired by the Betsy Ross, or had even heard of it. So where did the 12-star European flag come from? It never corresponded to the number of members of any European institution at the times of adoption.

The circle of stars is not standard heraldry. The one previous use I could find was the shortlived 13-star Betsy Ross flag of the American revolutionaries. This was replaced progressively by the rectangular arrangement of today, with one star for each state, as settled in 1818. There is no reason to think the Eurocrats were inspired by the Betsy Ross, or had even heard of it. So where did the 12-star European flag come from? It never corresponded to the number of members of any European institution at the times of adoption. At the end of 2019, installed PV was 633 GW (Bloomberg NEF, via

At the end of 2019, installed PV was 633 GW (Bloomberg NEF, via  The French get their priorities right. The AI management software that steers the panels gives priority to protecting the vines from extreme weather, such as hail – a serious risk to high-quality vineyards in Burgundy and

The French get their priorities right. The AI management software that steers the panels gives priority to protecting the vines from extreme weather, such as hail – a serious risk to high-quality vineyards in Burgundy and

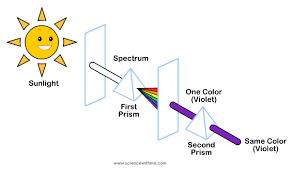

You all know the story in outline. In 1665 the bubonic plague that devastated London reached Cambridge, where Newton was a freshly minted B.A. (Cantab.) He fled to his uncle’s farm in Lincolnshire. This is now called

You all know the story in outline. In 1665 the bubonic plague that devastated London reached Cambridge, where Newton was a freshly minted B.A. (Cantab.) He fled to his uncle’s farm in Lincolnshire. This is now called