ARM Holdings of Cambridge, England, claim that that the cumulative total of the processors manufactured under their license has reached 25 billion. That´s three-and-a half Turing machines for every man, woman and child on the planet.

Turing machines? Yes. These are not special-purpose controller chips, but general-purpose programmable computer processors.

They are in principle capable of running any digital algorithm you can come up with, the definition of a Turing machine, and they can therefore emulate each other. Some algorithms never stop, and others won´t stop before the universe runs out of heat, but that´s a petty detail. From the great height of a mathematical logician like Alan Turing of King´s College, Bletchley Park and (to Britain´s shame) Manchester police station, all implementations of his machine are the same.

Of course if you add the desiderata useful and reasonable time, processors are every much not equal, and faster is better. We have advanced from the huge, crude, snail-like Colossus and ENIAC that respectively merely broke OKW´s Lorenz ciphers - a step beyond Enigma - and designed the H-bomb, to today´s inch-square marvels that can precisely render the glinting helmet on the snarling head of an orc, decapitated by the sword of your heroic avatar, as it rolls gratifyingly across a virtual dungeon floor. Orc credit

ARM´s processors (and those of its competitors) are embedded in mobile phones, MP3 players, cars, TVs, routers, set-top boxes and a raft of other gadgets. Even its first and simplest model, the ARM 2 marketed in 1986, ran at 8 MIPS, two-thirds of the contemporary Intel 386, workhorse of early PCs. The latest perform at over 5,000 MIPS per core, against the 30,000 or so MIPS per core of Intel´s.

From one perspective, it´s overkill to use the equivalent of 160 ENIACs to drive your car GPS or washing-machine. However, it´s now clearly cheaper to put an overspecified general-purpose computation engine in a controller than to design and debug an optimised one from scratch.

You´d be very hard put to get these embedded cores to run generic programs, though perhaps some enterprising Indian is building a Beowulf computer out of discarded mobile phones. The processors are typically not even distinct physical devices, just segments of larger chips, like Texas Instruments´ OMAP series, nestled with other components like graphics, sound processors, and USB controllers.

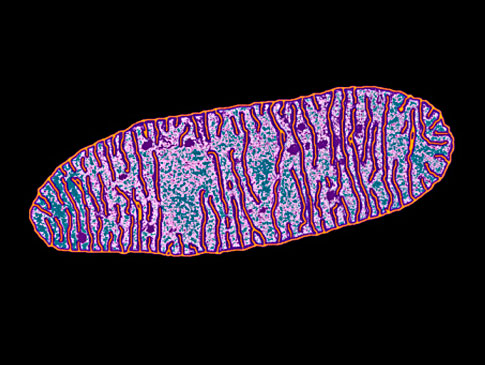

These Turing machines have become computational mitochondria: the bacteria that opted, more than a billion years ago, to live permanently within eukaryotic cells. The deal is that the mitochondria provide the host cell with energy (ATP) in exchange for food (glucose) and shelter (the cell´s wall and cytoplasm).  The mitochondia in the cells of a particular animal are all the same, though they power many different types of cell, from muscle cells to phagocytes to neurones. They are SFIK pretty similar between species.

The mitochondia in the cells of a particular animal are all the same, though they power many different types of cell, from muscle cells to phagocytes to neurones. They are SFIK pretty similar between species.

We like to think as mitochondria as the humble slaves of their host organisms, but that´s just our native eukaryotism speaking. You could equally well see the hosts as elaborate gentlepersons´ clubs for mitochondrial comfort. The commensal contract is a fair exchange. Do you own your cat or does your cat own you?

Mitochondrion photo source

Similarly, ARM´s microscopic generic computation devices power a great range of much larger chips and devices. They are beginning to multiply on chips: a mobile phone controller used to have one CPU, one new design has five - still a long way short of the hundreds of mitochondria in a typical cell. Unlike mitochondria, you can still just see CPUs with the naked eye: 1/2 to 2 millimetres on a side, the thickness of a hand-drawn line. Where the analogy breaks down is the pace of evolution. Mitochondria avoid the hazards of sexual reproduction by pure maternal inheritance. Accordingly their design changes slowly over the aeons, loosely coupled to the evolution of the hosts. Mitochondrial DNA still looks very like that of bacteria, with compact ring-shaped chromosomes. Computer processors are changing under our eyes, driven by Moore´s Law.

It´s a striking oddity that the market for computer processors, the heart of the competitive global electronics industry, is highly monopolistic. Intel has manufactured the great majority of all the mainline, CISC microprocessors ever made. It has usually had competitors, but no more than one or two at a time: Texas Instruments, Motorola, IBM, Cyrix, AMD. In the low-power market, ARM has had things more or less to itself [update: this is overstated - see Dave Jacobowitz´s comment infra], though as mobile and desktop devices converge, Intel and ARM are becoming competitors of each other.

These monopolies have been pretty benevolent. The network economies of standardisation to their direct and indirect customers are huge. That´s why we stick with Windows, and Windows sticks with Intel. Learning to run a different operating system is a considerable investment cost to an end-user. Learning to interface a graphics card or operating system with a different processor architecture and instruction set is a very much bigger one. So there´s one huge barrier to entry.

However, it´s not an absolute one. Processors cannot possibly be marketed as black boxes.

The intermediate customers are highly knowledgeable, and need to be given an enormous amount of information about the processors to be able to work with them. Intel and ARM know that if they bring to market dud devices, or attempt to gouge on prices, or slow down on the innovation treadmill, their potential competitors would tunnel through the externalities barrier and become real ones - or, in ARM´s case, to attempt a takeover, as it´s small compared to many of its customers. Like the Red Queen they cannot stop running.

ARM`s business model is quite different to Intel´s. Intel is an integrated design-and-build manufacturer. It sells boxes, and likes to see its sticker on computers that use them. ARM cares nothing for name recognition and has never sold a box since the demise of the BBC Acorn before 2000, a nice little educational computer in its day, with a prescient name. It´s a pure design shop. The designs are licensed, in immense detail, to device manufacturers all over the world, at around 6 US cents a copy. An example from a recent trade press release:

Taiwan-based United Microelectronics Corp. (UMC), one of [sic] world’s largest semiconductor foundries, is now the exclusive supplier of Kindle Fire ARM processors. UMC is going to supply to Amazon Texas Instruments OMAP4430 through the 45-nano process. The OMAP4430 represents a dual-core 1GHz processor produced on ARM architecture ….

The Kindle processor intimately involves ARM in Cambridge with three customers: Amazon in Seattle as end customer and designer of the complete device; Texas Instruments in Austin as designer of the complete controller to Amazon´s requirements; and UMC in Taiwan as the merchant chip foundry. The latter two are certainly licensees; I don´t know if Amazon also is. One has to marvel at the ability of the Internet to abolish distance in this very high-level technical collaboration. There´s no question that the final product works, and reliably so. Has the coffee-shop physical proximity of Silicon Valley become unnecessary for its successors?

I´m rooting for ARM for one reason beyond chauvinism: power. Its <$10 chips use milliwatts compared to Intel´s traditional tens of watts. Power is increasingly a bigger constraint than performance. In MIPS, ARM´s processors roughly track Intel´s of a couple of years back. Still, its next generation will run 64-bit Windows, in direct competition with Intel for laptops.

Also, and this is more important for global warming, servers. Running a Google search on your ARM-powered iPad uses little power in your hand, but adds to the 258 MW of Google´s ever-growing server farms. These are basically racks of hard drives and hot Intel motherboards. Departing from its usual policy of inoffensiveness, ARM has co-funded a startup in Texas to make server cards using its processors. We should wish them luck. Anyway, real competition - even in a duopoly - will be even better for customers: and very likely the planet.

Babbage´s 1871 Analytical Engine of 1871 was the world´s first mechanical programmable computer. This is just a part that got built. It never really worked, so its delivered MIPS were 0.

Technically ARMs (and any practical digital computer) isn’t a Turing machine. Turing machines have infinite memory. Real computers are finite state automata (FSAs): two steps down on the Chomsky Hierarchy.

You probably know more about this than I do, but memory doesn´t mean RAM, just storage of some sort. The original Turing machine´s memory was an infinite paper tape; and as Wikipedia points out, ¨like a Turing machine, a real machine can have its storage space enlarged as needed, by acquiring more disks or other storage media.¨

As you say, it’s certainly true that memory need not mean RAM and it’s also true that you can buy more memory. However, on the other hand, real computers need to be finitely instantiated which means that there are limits on their memory capacity (ultimately the amount of matter in the universe). It’s not that hard to design computational tasks (e.g., compute a very long series and then read it out backwards) that require arbitrarily large amounts of memory far larger than even potentially available. These tasks are computable on Turing machines but not on real computers. And of course because performance actually is important and the further away you get from main memory the slower things get, complexity analysis becomes relevant for assessing practicality as distinct from theoretical computability.

Well, there are both theoretical and practical concerns with respect to infinite vs. finite memory.

On the theoretical side, making Turing machine memory infinite simplifies many proofs in theoretical computer science enormously. For example, it is easy to show that there is no Turing machine program that determines whether another Turing machine program halts for an arbitrary input. The same is difficult to show for machines with finite memory; this is largely because in theory you could tabulate the results (but that would require a machine with more memory, not a machine with the same size of memory). A proof of the halting problem for machines with finite memory would probably have to involve something like Kolmogorov complexity to show that you cannot encode the function in the memory available.

A practical problem occurs because almost all systems that aren’t specifically engineered for extreme robustness (such as computers used to control airplanes or cars, which must not malfunction under any circumstances) tend to presume that memory is not finite; when they actually encounter an out of memory condition, they tend to crash (if they don’t directly lead to the machine becoming unresponsive from excessive swapping). If they crash while external state (such as a database or EEPROM memory) is not consistent, the result can be difficult to recover from. And memory allocation is such a pervasive operation in modern programming languages (it can occur almost anywhere in a computer program) that it is impossible to do appropriate testing for all cases that can occur (let alone formal verification).

Just a minor quibble: the cell’s relationship to mitochondria was in primordial times “symbiotic”, but in the modern eukaryotic cell this distinction scarcely exists anymore, so much so that it would be almost paradoxical to call a eukaryotic cell eukaryotic without one — simply put, it cannot survive without one…

It´s true that the symbiosis is radical and neither party can back out. The argument for seeing mitochondria as in sense still independently alive is that they reproduce by fission, like their ancestral bacteria. They are not asssembled by the cell´s DNA-driven internal and external machinery like everything else in our bodies.

“In the low-power market, ARM has had things more or less to itself…”

This is kind of a bizarre thing to say. The market for stand-alone embedded microcontrollers is quite fractured, with multiple vendors providing long-lived products in the 4b, 8b, 16b, and 32b space. For embeddedable IP the story is similar, with a few fewer players.

I worked for a company you have probably never heard of with similar 32b IP as the ARM9 plus some very clever capabilities for customization (http://www.tensilica.com). They claim their customers shipped 1B cores in 2011 and will ship 2B cores in 2012.

In fact, unlike the x86 space, the one thing that binds all these companies together is that they’re really not making all that much money. $0.05/core * 1B cores = $50M. That’s why even “mighty ARM” is not a big company, and also why Intel is not in the business of moderate performance low-power cores, but ultimately will be taken down by them as the world sees less and less need to pay the dollar and power tax for x86.

Thanks for the correction, I´ve updated the post. I´m glad you think the low-power design shops will win.

I’m not claiming that Intel will fail, but it will be dragged, kicking and screaming into this much lower margin business eventually. The dominance of x86 is in “traditional” Windows-based computing. That model is already fading. In embedded, it just doesn’t matter as the binary layer will not be exposed to users and even developers will face an LLVM, not a processor. On top of that, in most embedded systems, the question is not “how much performance can I get for X dollars?” but usually “what is the minimum dollars to get X performance?” And the x86 can never, ever win that game. x86 is a dog of an instruction set architecture. Intel, to it’s credit, figured out how to make it go fast, but only through herculean measures that take up lots of transistors (and power). Others without that baggage have a much easier time.

The power advantage of simple processors used to be a side-effect, not the main game, but now I think the market has changed, and it is compounding the cost advantage of these devices.

Even in the cloud server space, simple devices can win because most cloud workloads are “trivially” parallelizable. You’ve got 10M people checking their email, you’ve got all the independent threads you need. If you require 10x the number of 1 GHz ARM cores to serve them as you would have needed 2 GHz x86 cores, but each ARM core uses 1/100 the power, you’re going to come out ahead. People are slowly starting to figure this out, but it does require a different mindset.

These cores are also finding their way into high-performance computing. Check out the DE Shaw protein folding machine or the LBNL Green Flash project. (again, sorry, pitches for my old employer)

So yes, go ARM, MIPS, Tensilica, ARC!

James: Also, and this is more important for global warming, servers. Running a Google search on your ARM-powered iPad uses little power in your hand, but adds to the 258 MW of Google´s ever-growing server farms. These are basically racks of hard drives and hot Intel motherboards. Departing from its usual policy of inoffensiveness, ARM has co-funded a startup in Texas to make server cards using its processors. We should wish them luck. Anyway, real competition – even in a duopoly – will be even better for customers: and very likely the planet.

It’s not just about being green. In the high performance computing world, performance IS constrained by power. Unless you want to deliver machines with hundreds of thousands, let alone millions, along with dedicated nuclear reactors (a single Cray XE6 reportedly can consume up to 2 MW), there’s only so much wattage you can provide to any given place (and you still have to pay for it). That means that in practice, computation power per Watt defines an upper limit on what you’re capable of doing.

Intel’s model is still important because it is still very difficult to write parallel programs (sometimes, such as with cryptographic libraries that use cipher-block chaining for encryption, it can even be impossible) and; thus, you also need very good single-threaded performance for a great many practical applications.

Technical nit: I’m trying and failing to think of a common application where CBC linearity is a bottleneck. The only real applications where crypto performance is likely to be a bottleneck are network and disk encryption, but in both cases its common to break the data down into independent units (packets, records, whatever) each of which has its own IV and hence its own CBC state. This is certainly true of IPsec and TLS >= 1.1. In these cases, oyu can process each unit in parallel, so generally CBC performance shouldn’t be a problem. What did you have in mind?

I think there are important algorithms that are fundamentally non-parallelizable, but they don’t seem critical. As you point out, some crypto is of that nature, but in most situations you are saved by having lots of independent threads to work on. Unless extremely low latency is required you don’t need screaming-fast single-threaded performance. Indeed, you don’t find processors like that in networking equipment, even ones that support IPsec and/or other stateful things. I used to sell processor IP into networking gear that needed to terminate TCP (like certain kinds of network storage) at very high rates, 1Gb/s, 10GB/s. If you’ve got only 1 stream at 10 GB/s, this is difficult with /any/ processor, but real life isn’t like that. Much more likely to have 100 or 10000 streams.

But practically speaking it’s difficult to parallelize even problems that are good candidates for it. For example, MPEG4 has great opportunities for parallelism, but there’s also enough if/then/else “controlly” code to make it hard. And there are some pieces, like CABAC on the front-end that don’t parallelize much at all. So you end up with Amdahl’s law having a real effect.

In the end, people are going to have to learn new languages with new semantics to do parallelism well. We also will have to be parallel-aware when we create standards if we want to end up with good implementations. We may even need new software engineers.

I was simply giving CBC as an example of a problem where it is well-known that it cannot be parallelized without trying to have to explain complexity theory; obviously, in practice, most systems involving encryption will be I/O-bound rather than CPU-bound. But that doesn’t mean that there aren’t important problems that, for all intents and purposes, aren’t effectively parallelizable.

In complexity theory, the hard problems for parallelization are P-complete ones. The class of P-complete problems, in layperson’s terms, are those which inherently require polynomial time algorithms. Somewhat more formally, any problem with a polynomial time solution can be reduced to a P-complete one by transforming its input in polynomial time. (The Wikipedia page lists a few practical examples.)

An unproven conjecture (but one that like P NP, is strongly believed to be true, since decades of research haven’t brought up any counterexamples) is that P-complete problems lie outside the class of NC problems, i.e. the set of problems that can be solved in polylogarithmic time (O(log(n)^k) for some k) on parallel computers (to be precise, on a computer with a polynomial number of processors).

That does not mean that such problems are completely unparallelizable. And if you’re talking about optimization problems, you may be able to get approximate algorithms that are parellelizable. But, in general, the research community currently considers these problems to be inherently sequential.

And that’s something for which the question is whether the problem is even parallelizable; not whether engineering a parallel solution is practically feasible (or worth the effort).

When you start talking about the software engineering aspects of creating a parallel problem, you not only have to consider whether something can be solved in parallel, but whether you can create a program to do that in a reasonable amount of time with a reasonable degree of confidence about its correctness. In practice, writing a correct parallel program that actually gains you an appropriate speedup is more difficult and time-consuming than writing a correct sequential program (not counting so-called embarrassingly parallel or “embarallel” problems). That alone is a major reason why the sequential performance of processors still matters.

I’m certainly not arguing that there aren’t nonparallelizable problems. I was just surprised to see CBC chosen as an example because I’m pretty familiar with CBC-using systems and in my experience that’s generally not a problem in practice.

I wish you hadn’t hung this on Turing machines. First, Turing’s point (and the original paper is quite short and lucid, the kind of mathematical paper that should be taken as a paradigm) was that you needed only the tiniest quorum of circuitry and function, plus memory, to carry out arbitrarily complex computations. Second, Turing used the term to refer to any member of the class of such state-plus-RAM machines, each specialized for a particular task. What you’re talking about is a Universal Turing Machine, which can be programmed to emulate any individual Turing machine, and then reprogrammed to emulate any other one. And that’s not just true of things like ARMs (and Atmels and any of the dozens of other general-purpose microcontrollers). It’s also true of the special-purpose machines you chastise — pretty much all of the current crop of deskside supercomputers are built from graphics chips and the only reason it’s not done with discarded cellphones is that manufacturing technology has made it too hard to reprogram their embedded signal processors and get differently-decipherable data in or out.

But even worse than that, to my mind what you’re really celebrating with the ARM (and it’s worth celebrating, because it’s a commercial success story based on the work of some very smart and wise people) is the fact that modern software is a disaster. The reason that people specify ARMs (which can run the operating system of your choice) instead of microcontrollers, or general-purpose microcontrollers instead of custom circuitry or field-programmable gate arrays (which could do essentially all the same jobs faster and at less cost in silicon and power) is that it’s too damn hard to develop reliable code for those more-efficient devices. In an ideal world, programmers code whatever they needed some piece of hardware to do, and it would compile down to the most effective circuitry for the job with no questions asked. Instead, we have to settle for a big fat (although not as big and fat as Intel’s mainframe-beaters) general-purpose CPU because the billions of copies sold over decades ensure that maybe, just maybe, most of the really bad bugs have been wrung out of the software tool chain.

Paul: ¨In an ideal world, programmers code whatever they needed some piece of hardware to do …¨ But could what we actually see reflect some pretty robust defects in World v.1, making an elegant software-to-hardware chain as you envisage it to be unattainable or at least unaffordable? The demon in Turing´s machine is the human factor.

On that subject, you and other expert commenters are giving me a hard time on my loose use of the Turing machine concept. As I understand it, this was a historic scientific breakthrough that underpinned the first working programmable computers by creating an ideal type they approximated. I think this is sufficiently important for a necessarily inexact popular usage to be legitimate and necessary. Far more people know of Newton´s law of gravitation in a qualitative way (¨mutual attraction of any two masses diminishing by the inverse square of the distance¨) than can write it down. Here, I´m using the concept to draw a conceptual distinction between general-purpose computational devices, by Turing so flexible as to be in a sense equivalent, and more efficient but blinkered job-specific ones. Nobody has I think shown me wrong on this.

James: The fault is not in our machines but in ourselves? Could be. But to my mind you’re still foundering on the general-purpose vs “more efficient but blinkered,” because the blinkers don’t belong to the machines, rather to their users. A few insights here, a few crazy hackers there, and suddenly the ostensibly single-purpose machine becomes general-purpose. That’s why the graphics-card-based supercomputers, or the ones based on a few thousand discarded Playstations.

I think the real story here isn’t so much about Turing as it is about some monstrous hybrid of Amdahl’s Law and Baumol’s cost disease. At a certain point the price of engineers and managers (and time to market) gets high enough, and individual product lifetimes get short enough, that the non-recurring cost of efficiency (general-purpose or otherwise) swamps the potential advantages in unit cost. But that’s no necessarily a good thing.

And now back down to the basement to upload some code to the way-less-powerful-than-ARM cpu that controls my 3D printer…

First thing for you to do is do your homework on what is the market you wish to reach. "Low power broadcasting" is different from "Micro power broadcasting". The former term is more often used to describe stations who have applied for and received official licenses. So the next thing is to approach the FCC. The link below will help, here.

Once you arrive at the frequency and power (and the standards required), you can invest in the equipment. An important item is the antenna. Note that ERP (effective radiated power) means the the power output of the transmitter raised by the gain of the antenna.

Mitochondria generate ATP in cell which produce the energy in our body.