Many people get frustrated by the fact that miracle pills, diets and remedies are announced by scientists with regularity, yet in most cases subsequent research can’t replicate the original “breakthrough”. A recent integration of research studies on Omega-3 supplements (more commonly known as “fish oil” pills) is a case in point.

Early research seemed to indicate that fish oil pills dramatically lowered risk for heart attacks and strokes, not only in those people who had already suffered one cardiovascular event but in the rest of the population as well. A grateful nation bought the pills by the boatload.

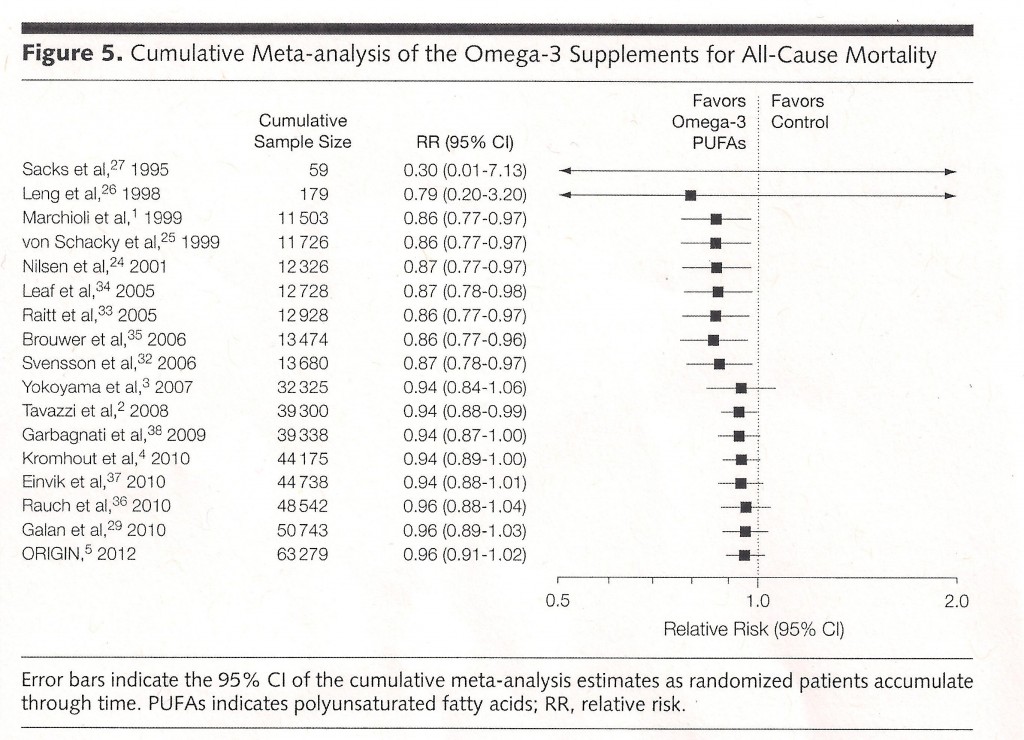

This chart from the recent meta-analysis published in the Journal of the American Medical Association beautifully illustrates the deliquescence of the effects of fish oil pills on mortality as research has accumulated.

A Relative Risk (RR) of 1.0 means there was no benefit, a number less than 1.0 indicates a health benefit, and a number greater than 1.0 indicates that the intervention is actually harmful. When there was only a little data available, fish oil looked like manna from heaven. But with new studies and more data, the beneficial effect has shrunk to almost nothing. The current best estimate of relative risk (bottom row of table) is 0.96, barely below 1.0. And the “confidence interval” (the range of numbers in parentheses), which is an indicator of how reliable the current estimate is, actually runs to a value slightly greater than 1.0, so the data cannot rule out that the pills have no effect on mortality at all.
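For readers who want to see where numbers like these come from, here is a minimal sketch of how a relative risk and its 95% confidence interval can be computed from raw counts. It uses the common log-scale normal approximation; the function name and the trial counts are invented for illustration and are not taken from the meta-analysis.

```python
import math

def relative_risk_ci(treated_events, treated_n, control_events, control_n, z=1.96):
    """Return (rr, lower, upper) using the log-scale normal approximation."""
    risk_treated = treated_events / treated_n
    risk_control = control_events / control_n
    rr = risk_treated / risk_control
    # Standard error of log(RR) for two independent groups.
    se_log_rr = math.sqrt(
        1 / treated_events - 1 / treated_n + 1 / control_events - 1 / control_n
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Hypothetical small trial: 10 deaths among 100 treated, 20 among 100 controls.
rr, lower, upper = relative_risk_ci(10, 100, 20, 100)
print(f"RR = {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Even though this made-up trial halves the death rate, its interval still reaches just past 1.0, which is the same situation, on a much wider scale, as the pooled fish oil estimate.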

Why does this happen? Small studies do a poor job of reliably estimating the effects of medical interventions. For a small study (such as Sacks’ and Leng’s early work in the top two rows of the table) to get published, it needs to show a big effect, because no one is interested in a small study that found nothing. It is likely that many other small studies of fish oil pills were conducted at the same time as Sacks’ and Leng’s, found no benefit and were therefore not published. But by the play of chance, it was only a matter of time before a small study found what looked like a big enough effect to warrant publication in a journal editor’s eyes.

At that point in the scientific discovery process, people start to believe the finding, and null effects thus become publishable because they overturn “what we know”. And the new studies are larger, because now the area seems promising and big research grants become attainable for researchers. Much of the time, these larger and hence more reliable studies cut the “miracle cure” down to size.
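The whole cycle can be imitated with a toy simulation. The sketch below assumes a pill with no effect whatsoever (true RR of 1.0), runs many small trials and a few large ones, and "publishes" a small trial only if its entire 95% interval falls below 1.0. Every number in it (trial sizes, the 20% baseline risk, the publication rule) is an assumption chosen for illustration, and the averages are simple geometric means rather than a proper meta-analytic pooling.

```python
import math
import random

def simulate_trial(n_per_arm, base_risk, true_rr, rng):
    """Simulate one two-arm trial; return (log RR, standard error)."""
    treated = sum(rng.random() < base_risk * true_rr for _ in range(n_per_arm))
    control = sum(rng.random() < base_risk for _ in range(n_per_arm))
    # Add 0.5 to each count so a trial with zero events does not break the logs.
    a, c, n = treated + 0.5, control + 0.5, n_per_arm + 0.5
    log_rr = math.log((a / n) / (c / n))
    se = math.sqrt(1 / a - 1 / n + 1 / c - 1 / n)
    return log_rr, se

def looks_like_breakthrough(log_rr, se):
    """Toy publication filter: the whole 95% interval lies below RR = 1."""
    return log_rr + 1.96 * se < 0

def mean_rr(trials):
    """Geometric mean of the relative risks in a list of (log RR, se) pairs."""
    return math.exp(sum(log_rr for log_rr, _ in trials) / len(trials))

rng = random.Random(0)  # fixed seed so the run is repeatable
TRUE_RR = 1.0           # assumption: the pill does nothing at all

small = [simulate_trial(100, 0.2, TRUE_RR, rng) for _ in range(2000)]
published_small = [t for t in small if looks_like_breakthrough(*t)]
large = [simulate_trial(5000, 0.2, TRUE_RR, rng) for _ in range(20)]

print(f"small trials run: {len(small)}, published: {len(published_small)}")
print(f"mean RR, published small trials: {mean_rr(published_small):.2f}")
print(f"mean RR, all small trials:       {mean_rr(small):.2f}")
print(f"mean RR, large trials:           {mean_rr(large):.2f}")
```

Only a small fraction of the small trials pass the filter, but the ones that do all report a large benefit from a pill that does nothing, while the large trials sit close to 1.0. That is the pattern in the chart: an early "miracle" followed by bigger studies that cut it down to size.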